A faster website with PHP cache, Redis and Memcached

Do you have a dynamic website built with MySQL and PHP? If your website and audience are growing, performance can become a problem. Without any caching mechanism, your website slows down as soon as it gets more visitors than the web server can handle. Would you like to know more about PHP caching, Redis and Memcached? Keep reading…

Why does your website need a PHP cache?

A dynamic website without caching can’t handle a lot of visitors. Each time a visitor accesses a dynamic page, the database queries and PHP scripts behind it use RAM and CPU power. Since server resources are limited, your web server and website will become slow or unavailable once too many requests arrive at the same time.

For example…

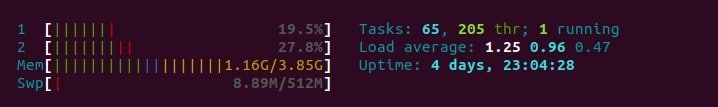

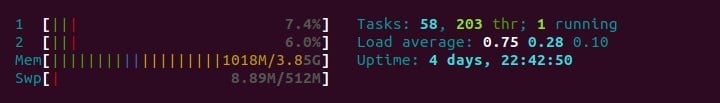

We tested a page from a website built with WordPress. We ran the first test without caching enabled. During the test both CPUs had to work harder and the load was somewhat high. At the point where 50 concurrent users were visiting the website, 15 PHP processes were in use. The URL load time (95th percentile) was above 100 ms.

Next we ran the same test with caching enabled. The load was much lower and the CPUs were not used at all. Only 5 PHP processes were in use at the point where 50 concurrent users were visiting the site. The cached version was much faster, with a URL load time (95th percentile) of less than 50 ms.

In absolute terms the difference in load time is not that big; the clearest gain is in the number of PHP processes (15 versus 5).

What kind of PHP cache can you use to speed up your website?

There are different cache types and each of them has a different function. Our list includes only server-side cache types that are used together with PHP scripts. Other cache types like browser cache, database cache or proxy cache are outside the scope of this article.

Full page caching

Page caching is the most important PHP cache type. As the name suggests, the whole PHP page is cached. In most cases the cached version of the page is stored on the server’s hard disk for a specific time.
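To make the idea concrete, here is a minimal sketch of a file-based page cache. It is not taken from any particular library: the function name `cachedPage()`, the use of the system temp directory and the 300-second lifetime are all assumptions chosen for illustration.

```php
<?php
// Minimal full-page cache on the file system (illustrative sketch only).

/**
 * Returns the HTML for $url and regenerates it only when the cached copy
 * is missing or older than $ttl seconds.
 */
function cachedPage(string $url, int $ttl, callable $renderPage, ?string $cacheDir = null): string
{
    $cacheDir  = $cacheDir ?? sys_get_temp_dir();          // assumed cache location
    $cacheFile = $cacheDir . '/page_' . md5($url) . '.html';

    // Make sure we look at the current modification time, not a cached stat.
    clearstatcache(true, $cacheFile);

    // Cache hit: the file exists and is still fresh.
    if (is_file($cacheFile) && (time() - filemtime($cacheFile)) < $ttl) {
        return file_get_contents($cacheFile);
    }

    // Cache miss: build the page (queries, templates, ...) and store the result.
    $html = $renderPage();
    file_put_contents($cacheFile, $html, LOCK_EX);

    return $html;
}

// Usage: the closure stands in for the expensive part of your script.
echo cachedPage($_SERVER['REQUEST_URI'] ?? '/', 300, function (): string {
    return '<h1>Hello</h1><p>Generated at ' . date('H:i:s') . '</p>';
});
```

Reloading the page within five minutes returns the stored HTML, so the timestamp in the output does not change until the cached file expires.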

If you search for “php cache”, the PHP Cache website appears at the top of the results. PHP Cache hosts several PHP cache libraries and adapters for APC, Redis, Memcached, the file system and many others. Their libraries have not been well maintained lately, and their own advice is to use the Symfony Cache component for new projects.

Since most professional libraries are part of the bigger PHP frameworks, it’s not so easy to find a good standalone PHP cache library. But I found one…

PageCache

This cache library is probably the best choice for the beginning PHP developer. It’s easy to install and you can add the necessary code to your existing PHP scripts. Even if your PHP-based website is older and written with procedural code, PageCache is a good choice. Below is a short summary of its most important characteristics.

- Works out of the box with zero configuration!

- PSR-16 compatible cache adapter (Redis or Memcached).

- Built-in page caching for mobile devices.

PageCache PHP example

Like I said, using PageCache is simple. Install the library using Composer and initialize it at the top of your PHP script; the library then creates and serves the cached file.
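A sketch of what that initialization can look like is shown here. The Composer package name (`mmamedov/page-cache`) and the method names `config()`, `setCachePath()` and `init()` are taken from the project’s README and should be treated as assumptions; check them against the version you install. The autoload path and the cache directory are placeholders.

```php
<?php
// Sketch of enabling PageCache at the top of a script.
// Assumption: installed with "composer require mmamedov/page-cache";
// method names may differ between library versions.

$autoload = __DIR__ . '/vendor/autoload.php'; // assumed Composer location
if (is_file($autoload)) {
    require_once $autoload;
}

$pageCacheActive = false;

if (class_exists(\PageCache\PageCache::class)) {
    try {
        $cache = new \PageCache\PageCache();
        $cache->config()->setCachePath(sys_get_temp_dir() . '/'); // assumed cache directory
        $cache->init(); // serve the cached copy if there is one, otherwise start capturing
        $pageCacheActive = true;
    } catch (\Exception $e) {
        // If caching fails, log the error and let the page render normally.
        error_log('PageCache error: ' . $e->getMessage());
    }
}

// The rest of your page follows; its output is what gets cached.
echo '<p>Generated at ' . date('H:i:s') . '</p>';
```

Wrapping the setup in a try/catch means that a caching problem never takes the page itself down.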

PHP page cache and popular frameworks

If you start a new project you should always consider using a PHP framework like Laravel, Symfony, CodeIgniter, Yii or Zend. They all offer their own PHP cache plugin or component. If you use a PHP framework you don’t need to write all the code yourself, because the most important libraries are already bundled. You also don’t need to choose (and test) all the required libraries yourself.

Most of the PHP cache components are able to cache whole PHP pages and also provide adapters for an object cache with Redis or Memcached.

Object caching

While a whole page is cached on your file system, the object cache works completely differently. Object caching is mostly used to store strings, arrays, objects or even database result sets. These objects are stored in RAM and sometimes in a database. In this blog post I will tell you more about two storage systems you can use as an object cache: Memcached and Redis.

What is Memcached?

Memcached was originally developed in 2003 by Brad Fitzpatrick. That makes it pretty old: in the same year we were using PHP 4 and MySQL 3.23. You can use Memcached with many programming languages. For PHP there are two extensions: one is called Memcached, like the object cache itself, and the other is called Memcache (note the missing “d”). Don’t use the latter; it’s not very well maintained.

Memcached is very fast and can help you speed up your website while keeping the load on your database at a low(er) level.

PHP Memcached example

Adding and reading objects is very easy and takes less code than adding / getting the same information from a MySQL database.
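The following sketch shows adding and reading an array with the Memcached extension. It assumes a Memcached server on 127.0.0.1:11211; `loadArticle()` and its data are hypothetical stand-ins for a MySQL query.

```php
<?php
// Cache-aside lookup with the Memcached extension.
// Assumptions: a server on 127.0.0.1:11211; loadArticle() replaces a real query.

function loadArticle(int $id): array
{
    // Stand-in for a (slow) MySQL query.
    return ['id' => $id, 'title' => 'Example article'];
}

function getArticle(Memcached $cache, int $id): array
{
    $key     = 'article_' . $id;
    $article = $cache->get($key);

    // get() returns false on a miss (or when the server is unreachable).
    if ($article === false) {
        $article = loadArticle($id);
        $cache->set($key, $article, 300); // keep it for 300 seconds
    }

    return $article;
}

// Only run the demo when the extension is installed.
if (extension_loaded('memcached')) {
    $cache = new Memcached();
    $cache->addServer('127.0.0.1', 11211);
    print_r(getArticle($cache, 1));
}
```

The extension serializes the array for you, so the value comes back from `get()` as an array again.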

Redis object cache

Redis is an in-memory data structure store, mostly maintained by Salvatore Sanfilippo and sponsored by Redis Labs. You can install the phpredis extension using PECL. The official PHP documentation currently doesn’t cover this extension. That’s not really a problem, because Redis is very popular in the development world and a lot of information is available online.

PHP Redis example

The functions or methods are very similar to the code we used for Memcached.
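As a sketch, the same kind of lookup with the phpredis extension could look like this. It assumes a Redis server on 127.0.0.1:6379; `loadArticleFromDb()` is again a hypothetical stand-in for a database query.

```php
<?php
// Cache-aside lookup with the phpredis extension.
// Assumptions: a server on 127.0.0.1:6379; loadArticleFromDb() replaces a real query.

function loadArticleFromDb(int $id): array
{
    return ['id' => $id, 'title' => 'Example article'];
}

function getArticleViaRedis(Redis $redis, int $id): array
{
    $key  = 'article_' . $id;
    $json = $redis->get($key);

    // By default phpredis stores strings, so the array is JSON-encoded.
    // get() returns false when the key does not exist.
    if ($json === false) {
        $article = loadArticleFromDb($id);
        $redis->setex($key, 300, json_encode($article)); // expire after 300 seconds
        return $article;
    }

    return json_decode($json, true);
}

// Only run the demo when the extension is installed.
if (extension_loaded('redis')) {
    try {
        $redis = new Redis();
        $redis->connect('127.0.0.1', 6379);
        print_r(getArticleViaRedis($redis, 1));
    } catch (RedisException $e) {
        echo 'Redis is not reachable: ' . $e->getMessage() . PHP_EOL;
    }
}
```

The main difference from the Memcached sketch is the explicit JSON encoding; the get/set pattern itself is the same.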

Which object cache you choose is up to you; both are fast and easy to use. Personally I like the structured PHP documentation for Memcached.

This similarity is also why PHP libraries and frameworks use adapters for Redis or Memcached: you can use the same methods to store your data in different object cache systems.

Fragment caching

This cache looks a bit like page caching, but is used to create a cached version for parts of a web page. This is very useful if some part of the page needs to be dynamic. A very simple example is the text “Hello user name” on a portal website, where all information is the same for all users except the name.

You can store your fragments on the file system, like the page cache, or you can use an object cache.
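A minimal sketch of the “Hello user name” case: the greeting is rendered on every request, while the shared block is cached on the file system. The function name `cachedFragment()`, the fragment name, the user name and the lifetime are all hypothetical.

```php
<?php
// Fragment caching sketch: only the shared block is cached, the greeting stays dynamic.

function cachedFragment(string $name, int $ttl, callable $render): string
{
    $file = sys_get_temp_dir() . '/fragment_' . md5($name) . '.html'; // assumed location

    clearstatcache(true, $file);
    if (is_file($file) && (time() - filemtime($file)) < $ttl) {
        return file_get_contents($file); // fresh copy available
    }

    // Capture whatever the callback echoes, the way a template would.
    ob_start();
    $render();
    $html = ob_get_clean();

    file_put_contents($file, $html, LOCK_EX);

    return $html;
}

$userName = 'Anna'; // hypothetical logged-in user

// Different for every user, so never cached.
echo '<p>Hello ' . htmlspecialchars($userName) . '</p>';

// The same for every user, so cached for 10 minutes.
echo cachedFragment('news-list', 600, function (): void {
    echo '<ul><li>News item 1</li><li>News item 2</li></ul>';
});
```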

PHP OPcache

This PHP cache works differently. It doesn’t cache the generated HTML like a page cache does, but the compiled PHP code of the script. OPcache compiles the PHP code and stores the compiled version in RAM. This way PHP can process scripts much faster while using less CPU power. Some important OPcache functions are:

- opcache_compile_file – Compiles and caches a PHP script without executing it.

- opcache_is_script_cached – Tells whether a script is cached in OPcache.

- opcache_invalidate – Invalidates a cached script.
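The three functions listed above can be combined as in the sketch below. OPcache is often disabled on the command line (`opcache.enable_cli=0`), so the code first checks whether it is active. The helper names and the demo script are hypothetical.

```php
<?php
// Warm up and invalidate a script with the OPcache functions.

function opcacheAvailable(): bool
{
    // opcache_get_status() returns false when OPcache is disabled.
    return function_exists('opcache_get_status') && @opcache_get_status(false) !== false;
}

function warmScript(string $file): bool
{
    if (!opcacheAvailable()) {
        return false;
    }
    if (!opcache_is_script_cached($file)) {
        @opcache_compile_file($file); // compile and cache, but do not execute
    }

    return opcache_is_script_cached($file);
}

// A throwaway script to demonstrate with (hypothetical content).
$script = sys_get_temp_dir() . '/opcache_demo.php';
file_put_contents($script, "<?php return 42;\n");

var_dump(warmScript($script)); // false when OPcache is not active

if (opcacheAvailable()) {
    opcache_invalidate($script, true); // force a recompile on next use
}
```

In practice you rarely need to call these functions yourself; they are mostly useful in deployment scripts that warm up or reset the cache.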

PhpFastCache

This PHP cache library is one of the bigger projects and is not part of any framework. If you need a cache for a high-traffic website, PhpFastCache is worth a look. You can use the library with different cache back ends like a file cache, SQLite, MongoDB, Redis and Memcached.

PhpFastCache code example

With PhpFastCache we can store and read the same kind of object as in the Memcached code example. It takes a bit more code, but if you change some of the values, you can use the same code for Redis or another cache system.
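Here is a sketch using the library’s PSR-6 style API with the file driver. It assumes the library was installed with Composer (package `phpfastcache/phpfastcache`) and that the `Phpfastcache\CacheManager` class and the driver name `Files` match your installed version; both can differ between releases. `loadArticleFromDb()` is a hypothetical stand-in for a database query.

```php
<?php
// PhpFastCache sketch (PSR-6 style API).
// Assumptions: installed via Composer; class and driver names depend on the version.

use Phpfastcache\CacheManager;

$autoload = __DIR__ . '/vendor/autoload.php'; // assumed Composer location
if (is_file($autoload)) {
    require_once $autoload;
}

function loadArticleFromDb(int $id): array
{
    // Stand-in for a (slow) MySQL query.
    return ['id' => $id, 'title' => 'Example article'];
}

// Fallback when the library is not installed: no caching at all.
$article = loadArticleFromDb(1);

if (class_exists(CacheManager::class)) {
    // Changing the driver name (plus that driver's configuration) switches
    // to another back end such as Redis or Memcached.
    $cache = CacheManager::getInstance('Files');

    $item = $cache->getItem('article_1');
    if (!$item->isHit()) {
        $item->set(loadArticleFromDb(1))->expiresAfter(300); // 300 seconds
        $cache->save($item);
    }
    $article = $item->get();
}

print_r($article);
```

The `getItem()` / `isHit()` / `save()` sequence stays the same for every driver, which is what makes the back end easy to swap.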

PHP cache and WordPress

Like this blog, a lot of my web development work these days is built on WordPress. There are a couple of mature cache plugins for creating a full page cache, and also some plugins for object caching with Redis or Memcached. WordPress has its own object cache interface. That is to say, if you connect the object cache API to Memcached and store a value in the object cache, the value is stored in Memcached rather than in the database.
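In plugin or theme code, the object cache interface is used through functions such as `wp_cache_get()` and `wp_cache_set()`. The sketch below shows the pattern; the cache key, the group name `my_plugin` and the helper functions are hypothetical.

```php
<?php
// WordPress object cache sketch. wp_cache_get() and wp_cache_set() are core
// WordPress functions; the key, group and "query" below are made up.

function computePopularPostIds(): array
{
    // Stand-in for an expensive database query.
    return [12, 7, 3];
}

function getPopularPostIds(): array
{
    // Outside WordPress the cache API does not exist, so skip caching.
    if (!function_exists('wp_cache_get')) {
        return computePopularPostIds();
    }

    $ids = wp_cache_get('popular_post_ids', 'my_plugin');
    if ($ids === false) {
        $ids = computePopularPostIds();
        wp_cache_set('popular_post_ids', $ids, 'my_plugin', 300); // 300 seconds
    }

    return $ids;
}

print_r(getPopularPostIds());
```

Without a persistent back end such as Memcached or Redis, WordPress keeps these values only for the duration of the current request.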

Here is a list of WordPress cache plugins I use frequently.

WP Super Cache

If your server is based on Apache, this page cache plugin is super fast and stable. It’s maintained by Automattic, the company behind WordPress. It doesn’t offer object caching, but it supports fragment caching (for the advanced user).

WP Rocket

This WordPress cache plugin is also a full page cache plugin, but it has some nice extras (Cloudflare integration, user cache…) and a very nice interface. This plugin is not free, but in my opinion it is worth every penny. I use WP Rocket for several websites and the plugin saved me 1 second of load time (compared to WP Super Cache).

Simple Cache

This simple cache plugin, developed by Taylor Lovett, is not so popular, but it has an almost unique feature: an object cache (Memcached or Redis). It doesn’t have a lot of options; just enable the cache and you’re done.

I found Simple Cache while I was looking for a plugin that plays nicely with Nginx. With Simple Cache, there is only one line you need to change in your Nginx configuration. You can use the other two plugins with Nginx as well, but you need to change (and test) more of the Nginx configuration. Ask me if you would like to know what I’ve changed in Nginx for WP Rocket.

Conclusion

Which PHP cache type is best depends on your web application, the server configuration and the expected server load. PHP caching is often not a simple task. If you want something simple, just use WordPress and one of the available cache plugins.


I just use Nginx FastCGI Cache and Autoptimize. Is that enough, or should I add more plugins?

Hi Robi,

right now I’m using exactly the same combination for this blog. Yes, the combination of both is fast enough for most WordPress websites (with Nginx-based hosting, of course).
